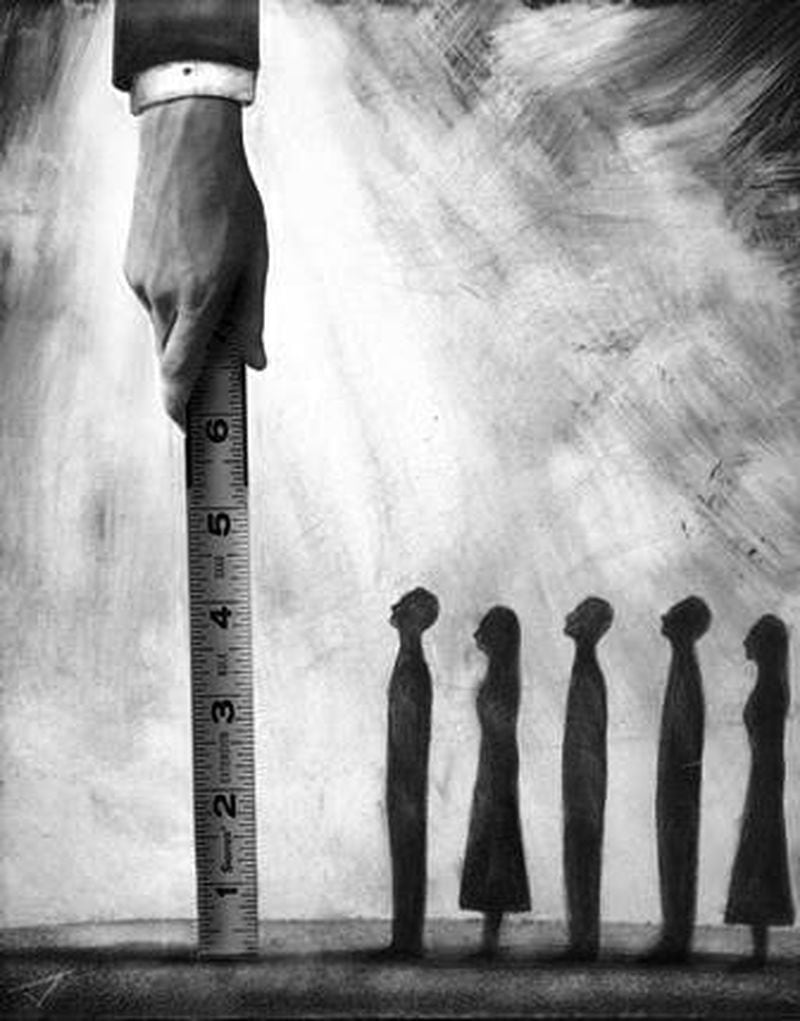

I understand the motivation and rationale behind teacher ratings. Of all the factors within the control of public policy that influence student success, teacher quality is now seen as the most important.

Credit: Maureen Downey


But initial attempts by states to measure teacher effectiveness have been clumsy at best.

Georgia's new rating system bases half of a teacher's score on classroom observations by administrators and half on the academic growth of students. (Teachers complain that the observation piece is not as thorough as it ought to be because administrators lack the time.)

For teachers of subjects covered by state exams, the process is at least clear: they will be judged by the performance of their students on Georgia’s standardized math, language arts, social studies and science tests.

But 7 out of 10 Georgia teachers work in disciplines for which there are no state tests.

Band students, for example, may take a pre-test (playing scales, sight-reading and multiple-choice questions) at the start of the school year and again at the end. About half of the band teacher’s overall rating will depend on the progress students show.

These alternative measures for teachers of non-tested subjects in Georgia are called Student Learning Objectives, or SLOs.

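Matt Underwood, executive director of the Atlanta Neighborhood Charter School, wrote a good blog entry on his concerns about SLOs, which figure into Georgia's Teacher Keys Effectiveness System (TKES) and Leader Keys Effectiveness System (LKES), the state's evaluation systems for teachers and school leaders: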

> Ok, you might ask, so these SLO assessments (like the P.E. one I saw that asked students to label a stick figure drawing of a volleyball serve) are pretty useless—they'll only take a little bit of class time to give, so what's the big deal? The "big deal" is that this aspect of TKES and LKES has already cost me alone about 23 hours of my time. That's trainings and workshops all related to SLOs in which I have had to participate over the past year. Now, as we get ready to do the same to our teachers of "non-tested subjects," I am deeply troubled that time that could be spent creating great lessons to inspire students' artistically, to help them develop into healthier young people, or to master a new language will instead be spent on an electronic platform learning how to upload and analyze data from a Scantron form based on a crappy test. And students as young as 1st grade will take as many as 7 different tests this school year to comply with the requirements of TKES, time that could be spent on real learning. If you'd like to argue that these are worthwhile trade-offs, please feel free to do so.

Georgia is not alone in trying to figure out a fair and practical way to assess how good a teacher is and how much students gain in that teacher's class. It is among 20 states using academic growth to evaluate teachers, a commitment Georgia made as part of its $400 million Race to the Top grant.

This new AJC story from my colleagues Molly Bloom and Ty Tagami demonstrates the complexity of that commitment. What follows is only an excerpt of a longer piece on MyAJC.com.

By Molly Bloom and Ty Tagami.

For teachers of non-state-tested subjects, many school districts have come up with their own exams. But educators and research suggest this approach isn't good enough for evaluations that could make or break careers.

The new system for rating these teachers is open to cheating, educators say, because in some cases teachers administer and grade the very tests used to evaluate them. The quality of tests varies by district, meaning a Spanish teacher in Gwinnett could be graded differently than one in Atlanta. And there are concerns about fairness, because research shows teachers of non-state-tested subjects tend to score lower than those who teach courses where state standardized tests are given.

State reports on districts already using the new system show that educators have told the Georgia Department of Education there are problems with how teachers of non-state-tested subjects are evaluated.

The tests, and the cut-off scores that place teachers at different rating levels, vary from district to district. Some districts, such as Atlanta Public Schools, use multiple-choice tests to evaluate all teachers. Other districts combine multiple-choice tests with other kinds of assessments, such as essays or, for music students, how well they play a C-major scale.

Carrie Staines, a teacher at Druid Hills High School in DeKalb County, said the quality of test questions in her district is poor. She should know: She was among the DeKalb teachers who volunteered to help write them. The Advanced Placement psychology test she wrote with two other teachers is far too short, at 20 questions, and reflects only "random" tidbits of knowledge that aren't necessarily crucial, she said.

The state monitors its standardized tests in math, reading and other areas for cheating, but security for these new, local tests is left up to individual districts. So far, the number of potential test-security problems reported has been "relatively low," King said. But Melissa King Rogers, an English teacher at Druid Hills High School in DeKalb County, said, "I think it's just wide open to the sorts of scandals we've seen in APS."