University of Georgia professor Peter Smagorinsky compares baseball and teaching in this essay. He explains how judging winning performances in both professions defies conventional measures and simplistic approaches.

If you have time between innings of the World Series tonight, take a look.

Credit: Maureen Downey

By Peter Smagorinsky

With the World Series upon us, let us turn to baseball. In "Moneyball," author Michael Lewis provides a close look at the management of baseball teams, in particular how scouts and executives evaluate players. Traditionally, baseball men have relied on three offensive statistics (batting average, home runs, and runs batted in) as the principal indicators of production and value.

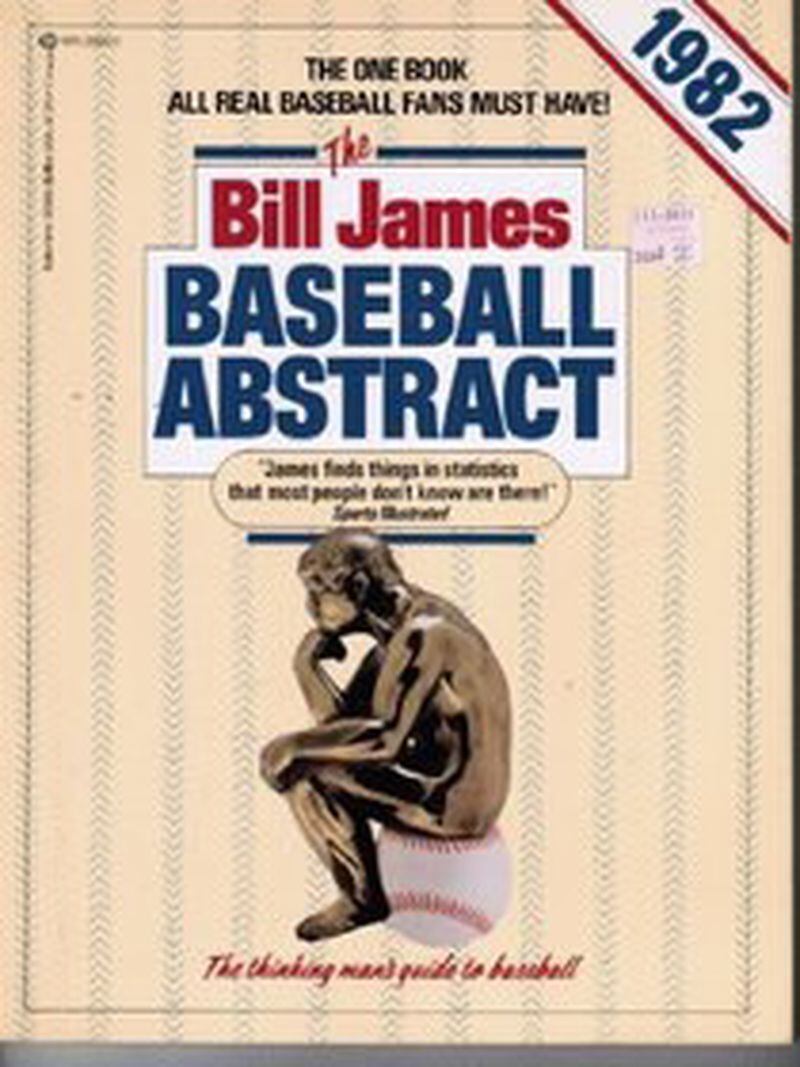

In the 1970s, however, Bill James began questioning whether those three statistics indeed provided the best measures of performance. To illustrate his doubt, he offered an example that I will attempt to reconstruct from ancient memory, with some modern embellishments, so the details will differ from his original. James's example concerned two outfielders, each of whom hit .250 with 25 home runs and 100 RBI. Based on these "Triple Crown" statistics, baseball traditionalists would view them as equally productive.

Let's look a little deeper, said James. The first player, Goofus, struck out 100 times and walked 25 times; the other, Gallant, struck out 25 times and walked 100 times. Goofus hit into 50 double plays; Gallant hit into 5. Goofus was a left fielder, the outfield position for the player with the weakest arm; Gallant was a center fielder, one of the most important positions on defense. Goofus played for the Rockies in Coors Field, baseball's best hitter's park, while Gallant played for Seattle, home to baseball's best pitcher's park.

And these are just offensive statistics; increasingly sophisticated defensive statistics further refine the evaluation of a player's contributions. No single statistic predicts value. The aggregate, however, can profile quite accurately which players will provide a return on investment, barring injury or other less predictable variables, and which players are likely to fall short of hopes and misplaced expectations.

The problem with traditional baseball statistics, according to Bill James, is that they do not predict the kinds of production that produce wins. Over time, sabermetrics, the field he pioneered, has developed far more sophisticated means of measuring the wide variety of factors that together suggest a player's value. Sabermetricians have found that some statistics, such as batting average, are of far less value than more nuanced measures such as on-base average. James and colleagues made the case that if you paid Goofus the same as Gallant when they were free agents, based on their deceptive Triple Crown stats, you'd be overpaying for Goofus and underpaying for Gallant.

The proof of this approach's success, to Michael Lewis, was the poorly funded Oakland Athletics' ability to compete for playoff spots against the league's financial heavyweights year after year. By measuring the right things instead of the most obvious ones, a growing number of baseball executives, such as Oakland's Billy Beane, were able to invest shrewdly in undervalued talent, including players with selective, productive abilities, and so build a winning team.

I am thinking of more than just baseball here. I am thinking of simplistic means of measurement in education. I won’t belabor the point, which so many of us make so often in this space, but I’m thinking in particular of the use of test scores as the sole means of measuring student performance and teacher effectiveness.

A batting champion who hits .350 but rarely draws walks can be of less value than a player who hits .300 and draws 100 walks because, as the old adage says, a walk is as good as a hit. Yet the league's on-base percentage leader rarely gets the accolades that await a batting champion.

In classrooms, many factors go into being a good student, and few of them are counted in today's accountability movement. In baseball, the Oakland A's sustained success suggests that measuring the right things can produce a winner at a low investment cost. Other teams undervalue what actually produces a win, falling back on statistics adopted more than a century ago, long before computers and electronic record-keeping made better means of measurement available.

Education is in a state similar to baseball's right now, although with some differences. Standardized testing, one of many means of evaluating learning and teaching and by many accounts among the most reductive and least reliable, has highly sophisticated databases and statistical tools available to its supporters, including the widely discredited "value-added measurement" of teacher evaluation. Yet computers, no matter how sophisticated, are being used to measure what's obvious instead of what matters.

Some might argue that the tests are reliable because they tend to arrive at the same conclusions about the same students over and over. Politicians and policymakers love such simplistic thinking. The problem is that consistency is not the same as measuring the right thing: the tests reliably measure what's most easily measurable, not what education is meant to accomplish.

I wonder what would happen if we did what sabermetricians have done with baseball and identified what really matters in measuring performance. Baseball differs from education in that winning is its primary goal. Education needn't be concerned with winners and losers, even though current policymakers appear to think in terms of races to the top and other competitive metaphors, which guarantee that when tests are normed, half of the population (and their teachers) will be labeled as underperforming.

Op-ed and blog essays are too brief to solve all educational conundrums; if you're interested in a detailed proposal for authentic evaluation published in a peer-reviewed journal, see this. Here, I would simply say that educational assessment would benefit from doing what baseball sabermetricians have done: finding a wide range of indicators that together profile a learner's academic growth.

Baseball statisticians, for instance, look at "park effects," the degree to which a ballpark's particular contours and conditions affect the statistics its home team's players produce. Spacious parks, for example, depress power statistics.

In education, as so many have demonstrated, poverty depresses test scores and other measures of academic achievement, and for many reasons: hungry kids are prone to illness and have a difficult time concentrating on abstract schoolwork, they miss school and move frequently, they have few familial models of academic investment and success, they often grow up within a climate of distrust of public institutions, and so on. That's not an excuse; that's a fact.

Rates of poverty and affluence could be among the many factors that thoughtful educators take into account, given the power of computers to parse out phenomena statistically. Many authors of essays in this space, including me, have written extensively on more authentic conceptions of teaching and learning that could help round out the misleading profile available through test scores alone.

But it's much harder to figure out how to value complex performance than it is to stick with tests, developed ages ago for other purposes, that provide a single number purporting to encapsulate the whole of human growth and achievement. That's what kids are learning in school these days: Teaching and learning are simpleminded. I can't imagine that disposition will be of much value when they enter the world of work and find that it just ain't so.
